Underwriting with superpowers: parsing claims data with OCR

Bottlenecks in traditional insurance underwriting

Shepherd is a technology-powered insurance provider for the commercial construction industry. We support large-scale businesses, generally performing greater than $25M in revenues. In contrast to a previous generation of consumer / personal lines insurtechs, we pride ourselves on a rigorous underwriting process that values speed and quality equally. For those who are new to insurance lingo, “underwriting” is the process of evaluating risk to determine what coverages an insurer is willing to provide, and how much an insurance policy should cost.

But first, let’s talk about the basic workflow: the underwriting process initiates with a submission from an insurance broker – usually through email – which contains all of the essential information about the prospective company along with its insurance needs. The important information is often stored in unstructured Excel and PDF documents, which can take hours or even days for an underwriter to review. From there, an underwriter must perform manual data entry to extract all key information and input into the pricing platform. This is a laborious and painful underwriter experience (UWX) to say the least.

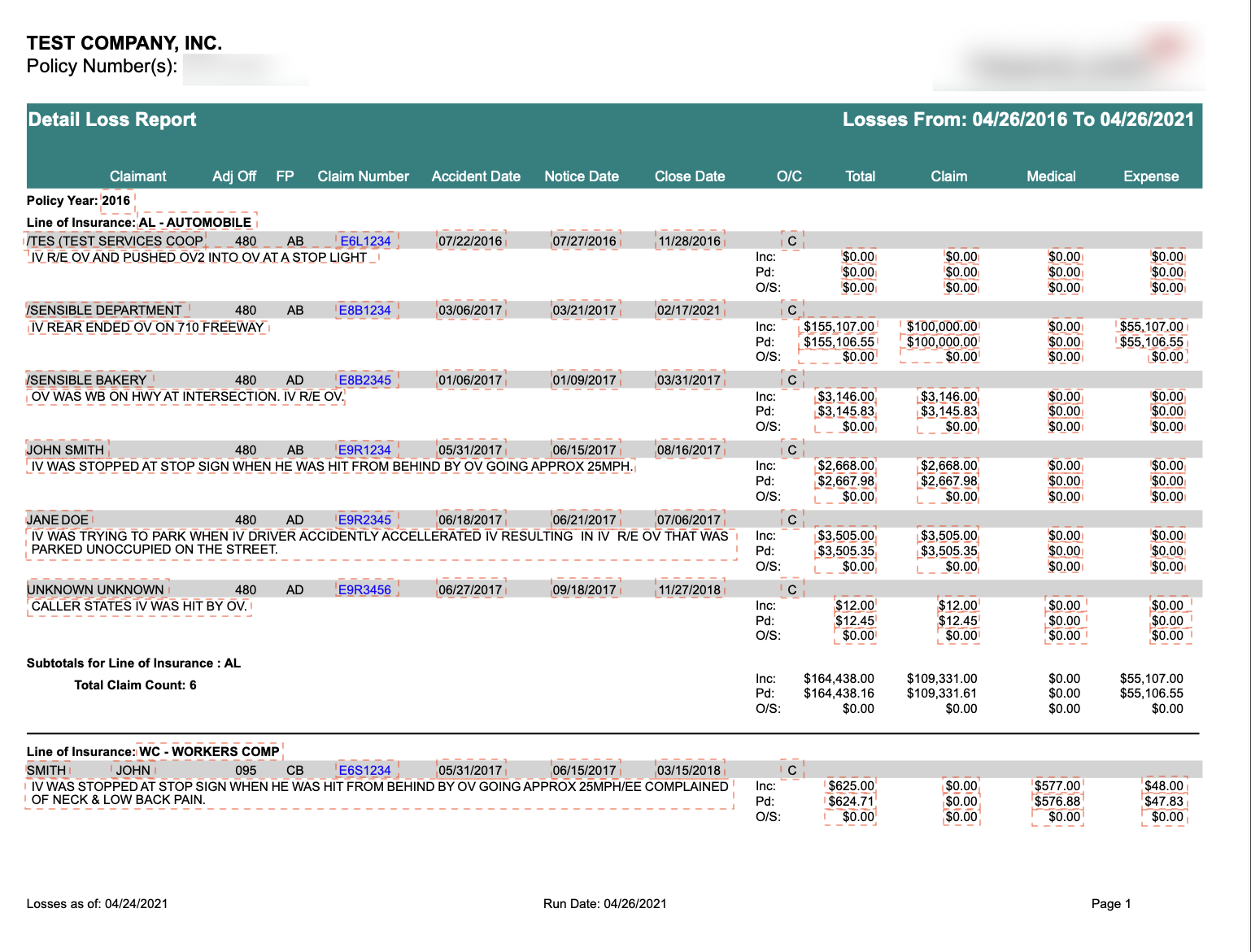

One of the key pieces of information in submission files is called loss runs - a complete listing of every claim the business has incurred over the past several years including the amount each prior insurance carrier has already paid for those claims or currently holds in reserve (in anticipation of future payments). This data is a critical indicator for modeling the likely future performance of this company and perhaps the most important information sent in a single submission. It turns out the lack of structure around this data presented our team with its greatest data entry bottleneck – adding hours to our reviews as we scaled up. We decided to challenge ourselves to solve this by developing a scalable process to extract claims data from PDFs with the highest accuracy and speed.

*An example loss run, with the red rectangles representing all of the data to be captured for a typical loss model.*

![An example loss run, with the red rectangles representing all of the data to be captured for a typical loss model.]

Traditional insurance providers solve the data problem by hiring a dedicated headcount to manually go through PDF files and copy over the data into their underwriting models by hand. At Shepherd, we chose a different path because we view this as a technology problem. Our engineering team embarked on a journey to build an automated solution that streamlines the process with the following first principles:

- Accuracy

- Extensibility (to various carriers)

- Maintainability

- Speed

At the core of the problem is the fact that each insurance carrier outputs loss runs in their own unique format – there is no standardization of claim data in insurance (seriously!). This means that our solution is required to easily extend to various PDF formats. Finally, it was important that the solution would be easy to maintain for a lean engineering team; we didn’t want to create more problems than we solved. We wanted the UWX to feel magical, and what could be more magical than turning a dreadful task that took hours into seconds?

## Engineering a Solution

Our engineering team developed a robust, scalable, and deterministic solution that empowers Shepherd underwriters to upload loss-run PDF files directly into our underwriting platform. This tool automatically extracts claims data and persists in our platform without the need for manual data entry. Our solution achieves 100% accuracy for known carriers and provides an exponential improvement in speed compared to human data entry. For a loss run of 350 pages (on the larger side), it would have taken someone on our team at least 3 hours to parse by hand. The new solution is capable of accurately extracting all of the data in under 40 seconds, giving a 270x boost in productivity.

Maintaining an experimentation mindset was critical to our success, as we initially explored several AI-led approaches that seemed contemporary and highly scalable. Ultimately though they could not deliver on accuracy or speed. In this blog, we’ll explore how we landed on an Optical Character Recognition (OCR) driven approach for its speed, accuracy, and determinism.

Applications of AI exploded onto the scene in 2023 and remain top of mind here in 2024. At Shepherd, we believe that Large Language Models (LLMs) present a unique opportunity for startups and incumbents alike to reap massive productivity gains, especially in the insurance industry. We’ve experimented with several approaches described below and concluded that for the time being, LLMs are best suited for generative applications rather than extraction tasks where accuracy matters. Unfortunately, the hype has not lived up to our expectations for this use case of table extraction. We’ve found that LLMs do not produce a reliable output every time due to their non-deterministic nature, which is a dealbreaker for us because we want to be able to audit our work and reproduce the result.

Additionally, APIs for LLMs are still slow making it hard for us to deliver a snappy experience. During the experimentation phase, we tried the now classic RAG-based AI system to convert a PDF file into vectors, store them in a vector DB like Pinecone, and perform a similarity search to feed relevant context to the LLM. Once again, the accuracy was poor for complex PDF layouts like the sample shown here, however, we were impressed with how reliably well the LLM would output data in the JSON structure.

Next, we experimented with removing the vector database from the equation. The bet is to use a model with a larger context window hoping for improved accuracy. We parsed the document with a `pytesseract` OCR package and passed the raw output to a GPT-4-32K with heavy prompt engineering. This approach ended up yielding the best outcome so far but still nowhere near the 100% accuracy requirement. Out of all the AI approaches we’ve tested, paid solutions came closest to being a “magic bullet” that produced accurate results, however, latency was still an issue. Once LLM APIs become faster over time, these solutions will shine.

The solution that checked off most of our requirements was SenseML from Sensible, as it enabled us to build an accurate and fast experience for our underwriters. SenseML is an abstraction on top of OCR solutions from AWS, Azure, etc. that provides us with a JSON-based Domain Specific Language to extract data from a PDF as well as programmatic access via an API.

Below is our final implementation with SenseML for an example loss run. Sensible generates all of the bounding boxes from the DSL code we wrote. One of the most unique abstractions in SenseML is “sections” which allows you to capture repeated items in the document, and claims are a perfect use case for this as each claim would represent a “section”. Once we successfully defined a section, green brackets on the document denote a captured section, and from there we can capture individual fields using an array of methods like Regex, Row, and more.

For example, the following SenseML code will extract the name of the company “TEST COMPANY, INC” in the top left corner of the document by anchoring on “Policy Number(s)” text and capturing the text above.

```json

{

"id": "policyholder",

"method": {

"id": "label",

"position": "above"

},

"anchor": {

"match": {

"type": "any",

"matches": [

{

"text": "policy number(s)",

"type": "startsWith"

},

{

"text": "SAI number(s)",

"type": "startsWith"

}

]

}

}

}

```

While this direction yielded the best fit for Shepherd, there are two tradeoffs we made with this approach. SenseML works exceptionally well with PDF documents that have a homogenous format and little to no variance in terms of PDF structure. The moment we encountered a document that had a slightly different structure we had to build a new configuration. We suggest using this solution for consistent PDF structures (e.g. driver's license) where speed is a top priority along with strict accuracy, and determinism. The other minor tradeoff is learning a new domain-specific language, which can be daunting for small organizations and will require an upfront time commitment.

Looking ahead to the next challenge

At Shepherd, most loss runs derive from a small set of insurance carriers and we’ve built a dedicated SenseML configuration for the top 8 most popular use cases. This brute-force approach works reliably well, however, our solution is only valuable if the loss run originates from our core group.

Any non-traditional loss run creates a significant limitation to UWX, as every single minute of productivity counts and our goal is to reduce the time spent on administrative tasks to zero. If we find a need to engage with the long tail of smaller insurance carriers, it won’t make sense for us to build a dedicated SenseML configuration for every single one of them. This presents us with a fascinating opportunity to figure out how to build an arbitrary solution, possibly with the use of multi-modal LLMs. The models will be able to extract data from PDFs to the best of their ability. We don’t know exactly what that will look like but we know for certain that an experimentation mindset will be at the heart of it.

Stay tuned! If you are a software engineer interested in working on challenges like this - we are hiring :)